Digitalisation – Automation culture and power: do we have the right balance?

Dr. P. Amaldi, Dr. M.S. Quercioli

Department of Psychology and Sport Sciences, University of Hertfordshire, Hatfield, UK

Senior Lecturer and module leader in Human Factors, Cognitive Ergonomics and Safety Management for the MSc Program in Industrial and Work Psychology and for undergraduate work occupational psychology.

and Dr. Anthony Smoker

Department of Risk Management & Societal Resilience, Lund University, Lund, Sweden

Research Scientist & Research lead, Lund University School of Aviation; Lecturer, MSc Human Factors & Systems Safety

This study analyses critical views elicited from major stakeholders in civil aviation/air traffic management environments about the current status of automation and the roles that each stakeholder group can play in addressing areas of concern. A number of the main themes that emerged from our study of stakeholders’ views on automation echo those widely discussed since the time of Paul Fitts. Other themes reflected challenges raised by imminent or ongoing technological changes, such as those embedded in UAS operations or in major ATM innovations such as the SWIM programme.

While a detailed analysis of our study will be available in a later publication, in summary we focused our attention on a key overarching theme: the management of change caused by automation and technological innovation. According to aviation stakeholders, the chief responsibilities of regulatory bodies and the challenges they face in meeting their objectives fall into two principal sub-themes:

Key Roles

- Define and set the limits of the system in terms of the boundaries of safe performance

- Set regulations that require staff training in new competencies integrating performance objectives and expectations

Challenges

- Proceduralise tasks to a high level of standardisation

- Identify stakeholder needs (both current and anticipated) and articulate them in a common vision and strategy, using rules, policies, objectives and tool kits

- Create a regulatory environment where the process of data collection and event analysis is formalised

- Provide regulatory expertise and meet requirements for tailored solutions rather than a “one size fits all” approach

- Champion regulatory structures for approving innovations, drawing on expertise in regulatory requirements to achieve objective-based guidance that interfaces regulations with technical and political developments

- Set up a regulatory framework that does not constrain innovation

Other considerations for regulatory bodies when managing change caused by automation and technological innovations are:

- Feedback on real work performance needs to be shared across industry to validate and/or improve design

- Assumptions and dependencies need to be communicated to operators and users, while awareness of the assumptions being made within each part of the industry needs to increase

- We need to communicate the assumptions and dependencies to those who use the automated system so that they are aware of its limitations

- Technical solutions must be integrated across organisational interfaces (pilots, ATCOs and ground crew)

While the debate reflected both longstanding and newer concerns, a commonly held view was that there was a lack of vision or understanding of the “big picture”.

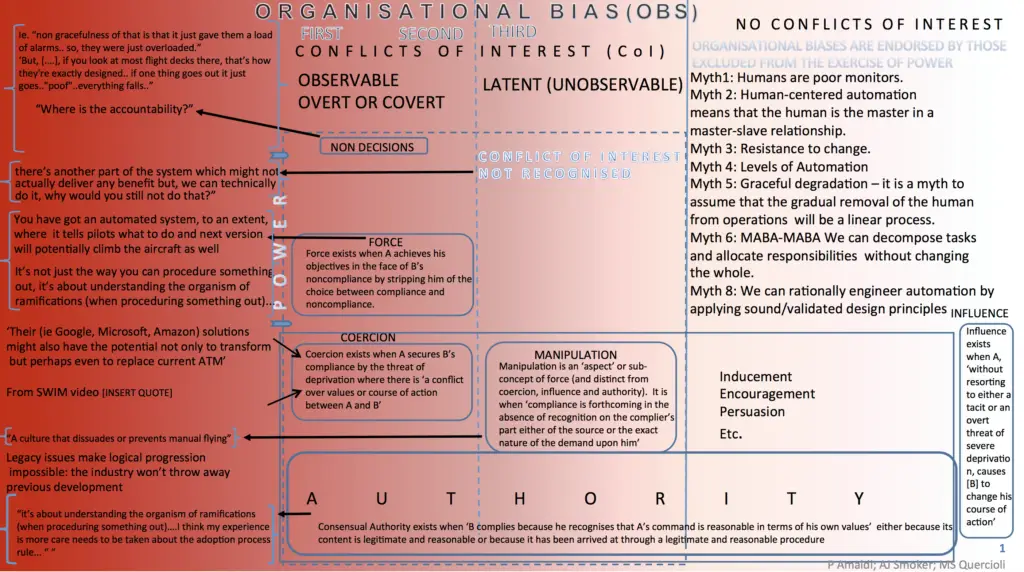

To gain further insight into possible underlying forces that have shaped the debate on automation over the past 60 years, we first summarise a debate on the dimensions of power relations in public fora and explore how it might account for some of our findings. Lukes notably conceptualises three increasing “dimensions” of power that escalate from observable to latent before culminating in an apparent resolution of all ‘conflicts of interest’ (CoI). The notion common to all levels is that A exercises power over B when A affects or has the capacity to affect B in a manner contrary to B’s interests. The first level stresses decisions on key issues involving observable CoIs. The second level refines the CoI notion by asserting that all organisations have biases – around values, rituals, institutional procedures – that benefit certain interest groups. Such biases allow for the exercise of power that can influence agendas, with groups competing to set these agendas. Power is exercised by confining the scope of decision-making to relatively “safe issues”. A decision might effectively suppress issues that pose a latent or obvious challenge to the values or interests of the dominant group.

The first two dimensions of power share a common notion of CoIs, in that power can be “observed” if there is a conflict of preferences. In essence, power in the first and second dimensions can only be observed under certain conditions and described as decision-making (or lack thereof) on declared issues.

In the third dimension of power, such CoIs have been smoothed by persuasion/influence. It concerns forms of power that do not necessarily involve overt conflicts. The most effective and insidious use of power is to prevent such conflicts from arising in the first place. In the third dimension, power is exercised by preventing grievances, by shaping cognitions and preferences so as to make it seem that the status quo is unchangeable – and indeed beneficial.

Indeed, is it not the supreme exercise of power to get another or others to have the desires you want them to have – that is, to secure their compliance by controlling their thoughts and desires? (p. 27)

Stephen Lukes (2005)

Lukes refers to the power that social institutions have to reflect the values of a few groups at the expense of others. Influencing organisational members’ values is a way of obscuring conflicts of interest. In this view, ‘culture’ is a means to eliminate or resolve resistance, where the dominated groups adhere to values established by the dominant groups.

While the first two dimensions focus on observable conflicts of interest, the third dimension of power stresses the notion of latent conflicts, expressed by a contradiction between the interests of the dominant groups and those of the excluded, which remain unexpressed or become, through persuasion, unconscious. This third dimension of power is at times referred to as social “myths”.

A generic schema suggested by Lukes has been adapted to code the various statements collected from multiple stakeholders in different settings. This is called the ‘power matrix’ and our focus will be mainly on the third dimension.

We identified a number of ‘myths’ that we define as either beliefs on the status quo or expectations of how human-automation interaction might evolve. While these expressions were grounded in some sort of plausible narrative, they are more ‘folk stories’ than a reflection of the status quo. We have collected evidence from aviation domain experts and from specialised literature where there seems to be quite some agreement that they’ll never come true. Allegedly, the role of these myths is to resolve conflicts of interest, and to secure the acceptance of current and future trends in automation by those stakeholders who will have to ‘make it work’. A limited number will be listed along with ‘counter evidence’.

Myths as expression of the third dimension of power

- Human is the limit, as in ‘humans are bad monitors’. Counter argument/evidence: humans are sense makers, but sense making is inhibited by task-artefacts that make monitoring meaningless. See also the iatrogenic effects of man-designed monitoring tasks

- Master-slave relationship where the human is the master. Also human-centred automation. Counter evidence is the belief that automation will deskill people: “So if ATM becomes more mature, and the system support grows, then the level of skills that we ask from the controllers will reduce”. This cannot be called ‘supporting the human’, nor is it conducive to high-level strategic decision making

- Resistance to change. Counter evidence: automation is not trusted because it is not tuned, produces too many false alarms and often doesn’t deliver what it promises (Baumgartner)

- LoA (levels of automation). This notion assumes a linear, orderly progression and ignores the messiness of actual automation, which has no overall plan and no strategy

- LoA and “graceful degradation”: the human will slowly be out of the ‘picture’. The myth is that this is assumed to be a linear process and autonomy is ranked as progress. Counter evidence: this myth ignores the issue of coordination

- You can decompose and allocate without changing the whole: decomposition and allocation create new requirements, and the result is that humans tend to be pushed out of control loops

- We can rationally engineer automation by applying sound/validated design principles (like MABA-MABA)

- Human as an active/participant monitor. Counter evidence: many examples of automation show that monitoring degrades

- Automation decreases knowledge requirements. Counter evidence is that it changes the set of skills needed to monitor the process

Conclusions

- The effective and successful implementation of highly integrated human-machine work systems is achievable only by managing power relations that influence the acceptance of the change

- Power relations play a significant part in the management of change. The path dependency that has influenced the evolution, when seen through a lens of power relations, clearly indicates that the barriers to implementation stem from the conflicting needs and understanding of what automation means. For example, human system integration is fundamentally different from automatic control – thus control systems engineering or Wiener-style cybernetics approaches to automation philosophies are missing an essential facet of today’s work systems

- Technology offers ways to integrate, i.e. to strengthen or create dependencies, thus increasing complexity. This raises new requirements for new inter-organisational power relations, including the highest institutions such as ICAO, supranational regulatory bodies, the manufacturing industry and operators. The scope of automation decisions appeared to go well beyond the boundary of design communities.