Digitalisation – A functional view of humans in digitalised socio-technical systems: some thoughts

Dr. Paola Amaldi

Senior Lecturer and module leader in Human Factors, Cognitive Ergonomics and Safety Management for the MSc Program in Industrial and Work Psychology and for undergraduate work and occupational psychology.

In recent years, both NATS and the UK CAA have invested effort in eliciting from aviation stakeholders (pilots, ATCOs, airlines, regulators, engineers, manufacturers) their main concerns about present and future automation scenarios in civil aviation. Techniques ranging from Delphi studies to brainstorming, open-ended questionnaires and round-table discussions have generated a wealth of statements encapsulating these views. We have scrutinised these data with two objectives: (a) to identify the main themes as they were raised by different groups of stakeholders, and (b) to characterise the nature of the debate through a cultural analysis of power relations.

The output of these analyses is discussed in a separate contribution. Here we highlight a particular set of concerns that prompted us to reflect on the role of human factors/cognitive engineering in designing human involvement in present and future ATM scenarios.

Interconnectedness, sharing, dependencies and liability/accountability were frequently voiced concerns. To a point, they reflect the current trend in ATM automation plans, which seems to be characterised by increasing interconnectedness and by a willingness to share information among potentially competing stakeholders. We shall argue that these complex scenarios may require stakeholders to become better ‘system thinkers’.

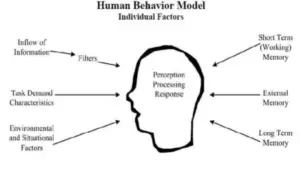

With regard to the ‘human factor’, for example, we need to think of humans more as system components, mutually constituted in relation to other components, rather than just as minds interacting with the environment. Fig. 1 illustrates the conventional focus on the mind and its interaction with the task-oriented environment.

Although phrases like ‘total system’ and ‘holistic approach’ are commonly invoked as necessary for studying and/or planning complex scenarios, their deeper meaning is usually grasped only in part. This is the case, for example, with the recommendation to take into account the needs and constraints of various stakeholders. ‘System thinking’, however, also refers to the interdependencies and mutually constitutive nature of system components, so that a ‘system approach’ to, say, a technology upgrade would start by deciding on things like the ‘workable’ boundaries of the system, including components that might be indirectly involved or affected, hidden dependencies and possible side effects.

How, then, would ‘system thinking’ work for cognitive engineering/human factors? Humans are generally treated as a ‘module’ interacting with other modules. Because of the presumed ‘intrinsic’ characteristics of humans, these interactions may lead either to errors (say, because of faulty display design) or to successful achievement of the task (say, thanks to human ingenuity and adaptive responses). Because of this dual nature of the human ‘module’, a ‘human-centred’ design has been advocated, which takes human characteristics as a set of constraints and potentialities on which to base the design requirements of human-machine systems and, ultimately, human-machine integration. While not challenging the ‘human-centred’ approach, we suggest a view which might prove more conducive to understanding dependencies and interactions within highly interconnected complex systems.

Let us for a moment imagine that humans are neither a limit nor a potential, but rather a functional component of the system whose behavior has evolved as a reflection of the historical and cultural conditions that render organisational goals, values and practices intelligible. Further, let us assume that humans are natural sense-making and meaning-seeking beings. Boredom, for example, could then be seen as a failure to find meaning in repetitive activities or, as Hancock (2013) puts it, a failure to develop internal motivation (see also Drury, 2015). On the other hand, humans manage to make sense of simple actions like writing on paper flight strips, making them functional in ways not anticipated by the designers. One of the problems in designing digital equipment is that engineers have failed to take these sense-making skills into account. When electronic flight strips were designed, for example, this resulted in a failure to capture the meaning or function of simple gestures like placing a (tilted) strip on the paper strip bay.

If these assumptions hold, it appears that functional (sometimes called ‘adaptive’) behavior reflects the best meaning humans can make of what they are expected to achieve, given the broad conditions under which they operate. However, such adaptations, or ‘ingenuity’, are not endogenously created; rather, they reflect the historical conditions in which those practices developed. Dysfunctional behavior would then not be exclusively the result of intrinsic human limitations, but more likely the consequence of changes (in values or practices) in some parts of the system, such that the behavior becomes inadequate in the eyes of those who own the changes. This view would help us focus on unspoken dependencies and interactions among components of the larger system, and might prove a better lever for change in the face of the often-declared failure to understand, predict and ultimately change human behavior.

An instance of the narrow and somewhat helpless focus on individual functioning in high-tech systems is provided by the comments, insights and analyses generated around the AF447 accident. Without denying the vast coverage of a number of ‘contributing factors’ provided by various reports, reportage and interviews, there has been a certain insistence on the crew’s decision-making processes at crucial moments, such as when Capt. Dubois decided to take a rest just before crossing the turbulence zone (“The decision he made, in his mind, he was quite happy with”). And yet a cursory look at the ‘culture’ of automation, including quotes from our study of ATM stakeholders, shows signs of a marked preference for pilots not to intervene and to trust automation. If so, then Capt. Dubois’s decision to leave, given the trust placed in highly automated piloting functions, is hardly surprising.

When it comes to defining the role of operators in high-tech systems, we might need a sort of ‘Copernican shift’ away from placing the human mind at the center of the information-processing universe (see Fig. 1) and towards treating it as a functional component of a complex web of interactions. Mainstream literature in cognitive engineering and cognitive work analysis suggests two main questions to ask when aiming to change the behavior of the human component of the system: (i) what is, or has been, the function of the to-be-changed behavior? and (ii) what historical antecedents made that behavior one of the possible adaptive responses?

Therefore, changing the behavior of the human component might require an understanding of how other components, whether ‘soft’ (culture, values, pressures) or ‘hard’ (procedures, hardware, software), have in the past made the to-be-changed behavior plausible and intelligible, if not functional. Human cognition does not just interact with the institutional order and with cultural and historical praxis; it is a reflection of them. How human beings adapt to or adopt the required changes will be shaped in part by how other interacting components change to make those adaptations/adoptions functional and meaningful with respect to a broader context, one that often goes well beyond that considered by designers, procurers and regulators. Unintended effects, or side effects, then seem to be the outcome of a narrow focus that overlooks broader dependencies which nonetheless appear crucial to achieving the system’s objectives. This is well mirrored by the worry expressed by many stakeholders in our study about the inadequate understanding of the role these dependencies play. The challenges ahead require us to become better system thinkers.

References

Drury, C. G. (2015). Sustained attention in operational settings. In J. Szalma, M. Scerbo, P. Hancock, R. Parasuraman, & R. Hoffman (Eds.), Cambridge handbook of applied perception research. Cambridge, England: Cambridge University Press.

Hancock, P. A. (2013). In search of vigilance: The problem of iatrogenically created psychological phenomena. American Psychologist, 68(2), 97.